Stitching DeiTs

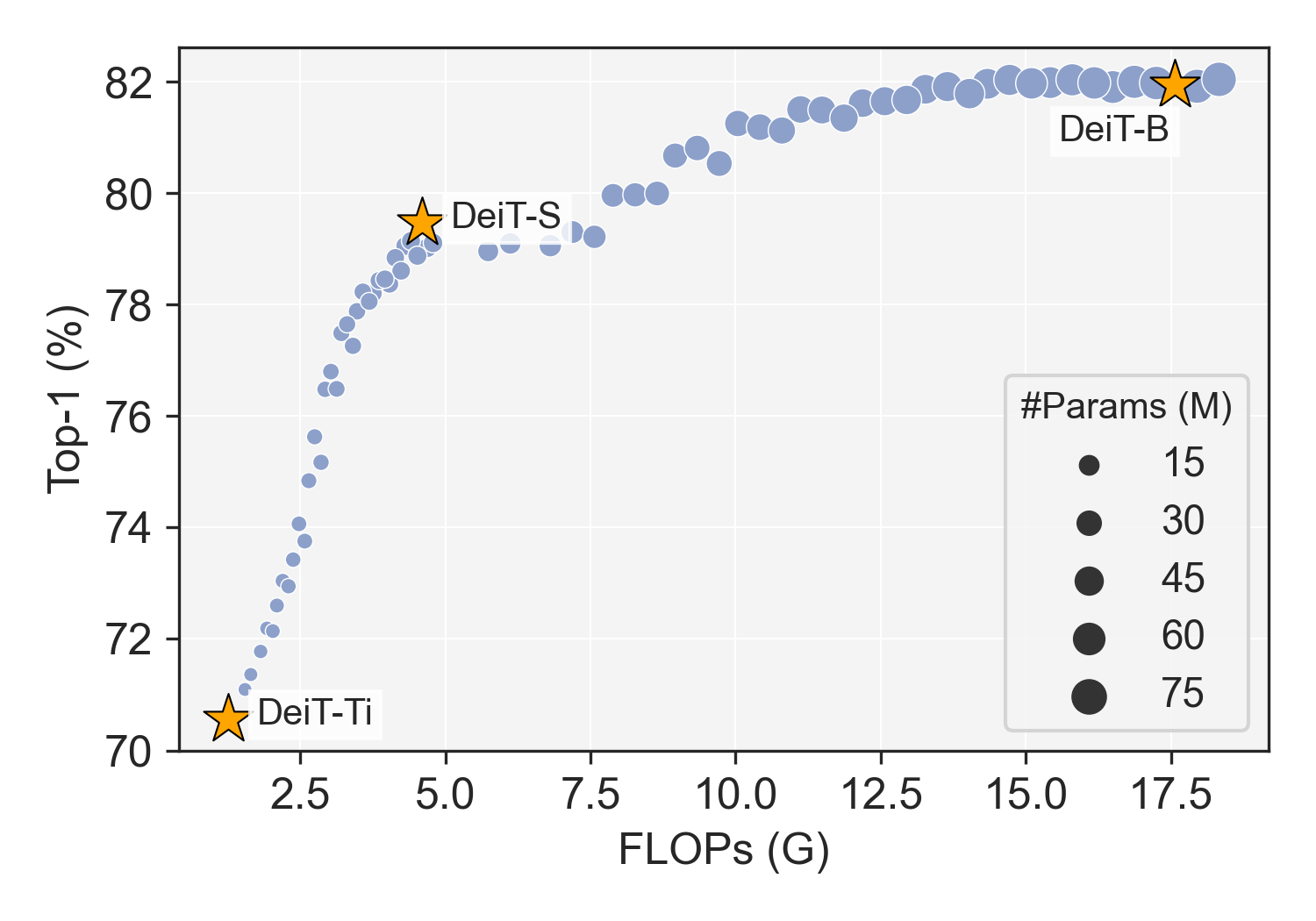

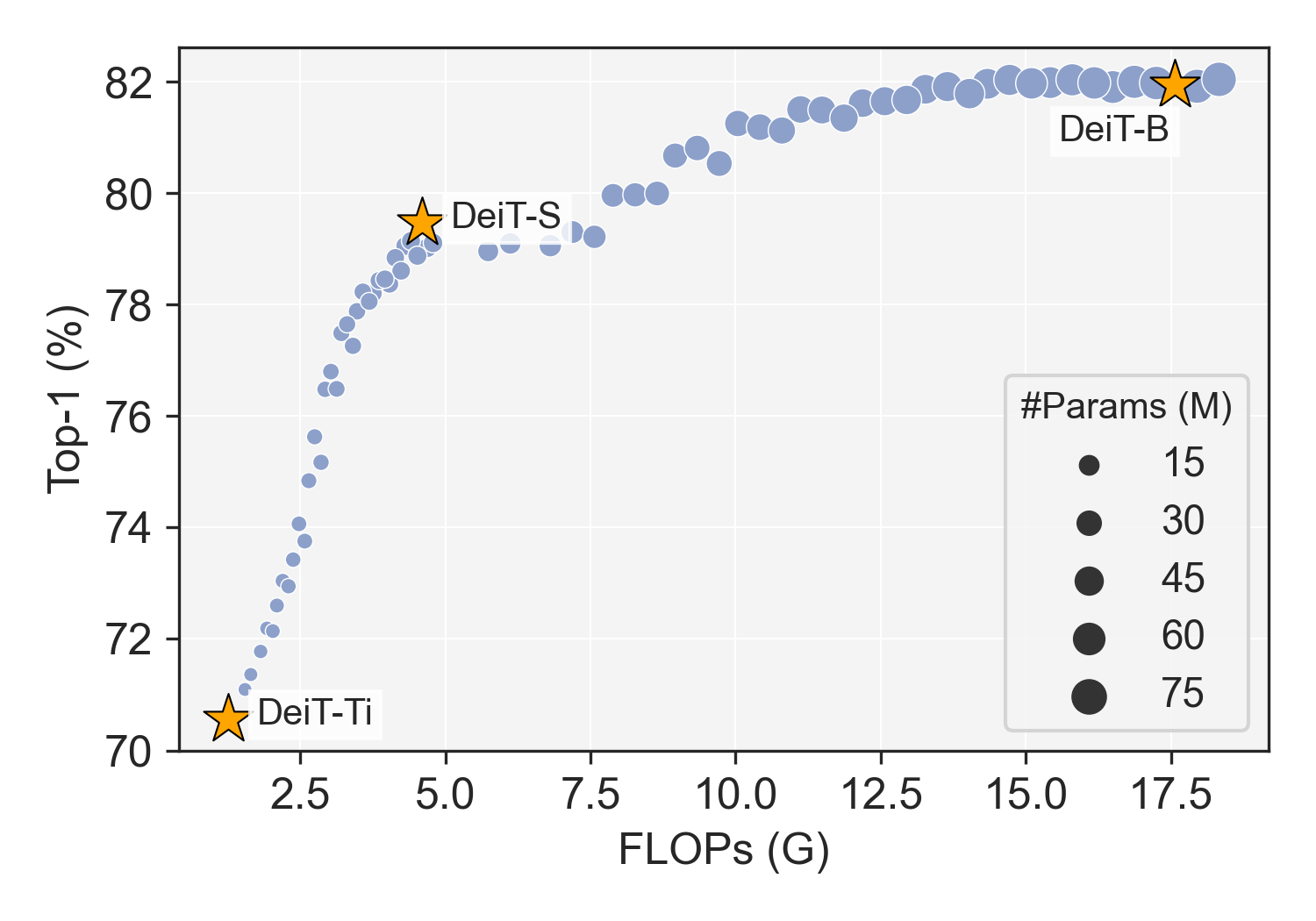

Assembling pretrained DeiT-Ti/S/B on ImageNet-1K with 50 epochs of training.

One Stitchable Neural Network vs. 200 models in the Timm model zoo. This figure shows an example of SN-Net built by stitching ImageNet-22K pretrained Swin-Ti/S/B. Compared with each individual network, SN-Net can instantly switch its network topology at runtime and covers a wide range of computing resource budgets. Larger dots indicate larger models with more parameters and higher complexity.

The public model zoo, which contains an enormous number of powerful pretrained model families (e.g., ResNet/DeiT), has reached an unprecedented scale and contributes significantly to the success of deep learning. As each model family consists of pretrained models at diverse scales (e.g., DeiT-Ti/S/B), a fundamental question naturally arises: how can we efficiently assemble these readily available models within a family to obtain dynamic accuracy-efficiency trade-offs at runtime?

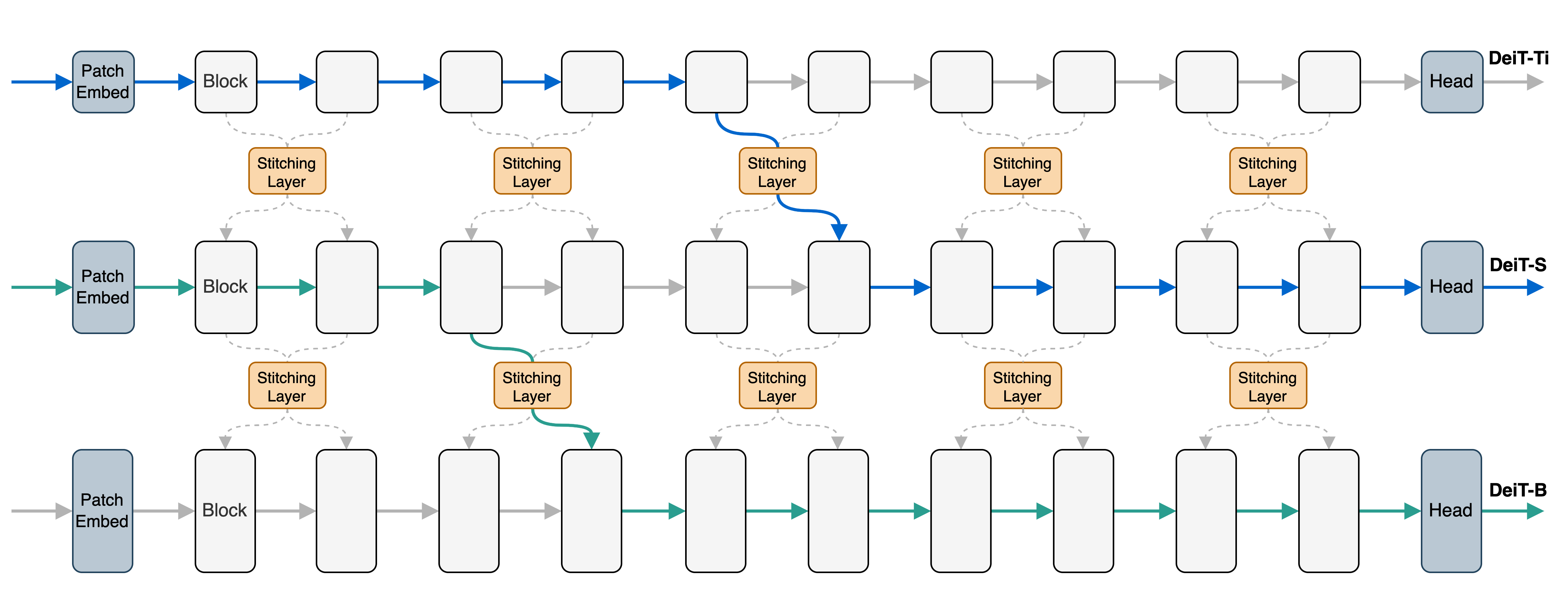

To this end, we present Stitchable Neural Networks (SN-Net), a novel scalable and efficient framework for model deployment. It cheaply produces numerous networks with different complexity and performance trade-offs given a family of pretrained neural networks, which we call anchors. Specifically, SN-Net splits the anchors across the blocks/layers and then stitches them together with simple stitching layers to map the activations from one anchor to another.
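To make the split-and-stitch idea concrete, here is a minimal NumPy sketch built on toy stand-ins, not on real DeiT weights. The helpers `make_anchor`, `run_blocks`, `fit_stitch` and `stitched_forward` are hypothetical names, each "block" is a toy residual `tanh` layer, and fitting the stitching layer by least squares on paired activations is an assumption made here only to show how a linear map can carry activations from one anchor's width into another's; SN-Net itself further trains its stitching layers.

```python
import numpy as np

def make_anchor(depth, dim, rng):
    # Hypothetical toy "anchor": `depth` residual tanh blocks of width `dim`,
    # standing in for the blocks of a pretrained model.
    return [rng.standard_normal((dim, dim)) * 0.1 for _ in range(depth)]

def run_blocks(x, blocks):
    # Apply each toy block as x <- x + tanh(x @ W), row-wise over tokens.
    for w in blocks:
        x = x + np.tanh(x @ w)
    return x

def fit_stitch(feats_a, feats_b):
    # Assumed initialization: least-squares linear map M with feats_a @ M ~ feats_b.
    m, *_ = np.linalg.lstsq(feats_a, feats_b, rcond=None)
    return m

def stitched_forward(x_a, anchor_a, anchor_b, k, stitch):
    h = run_blocks(x_a, anchor_a[:k])   # first k blocks come from anchor A
    h = h @ stitch                      # stitching layer: width d_a -> d_b
    return run_blocks(h, anchor_b[k:])  # remaining blocks come from anchor B

rng = np.random.default_rng(0)
depth, d_a, d_b, n = 4, 8, 16, 256
raw = rng.standard_normal((n, 3))        # toy inputs shared by both anchors
embed_a = rng.standard_normal((3, d_a))  # per-anchor input embeddings
embed_b = rng.standard_normal((3, d_b))
anchor_a = make_anchor(depth, d_a, rng)  # smaller anchor
anchor_b = make_anchor(depth, d_b, rng)  # larger anchor

k = 2  # stitching position: blocks 0..k-1 from A, blocks k.. from B
feats_a = run_blocks(raw @ embed_a, anchor_a[:k])
feats_b = run_blocks(raw @ embed_b, anchor_b[:k])
stitch = fit_stitch(feats_a, feats_b)
out = stitched_forward(raw @ embed_a, anchor_a, anchor_b, k, stitch)
print(stitch.shape, out.shape)  # (8, 16) (256, 16)
```

Because the stitching layer is just a linear map applied to every token, it plays the same role as the 1x1 convolution mentioned for SN-Net; only its input and output widths have to match the two anchors at the chosen position.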

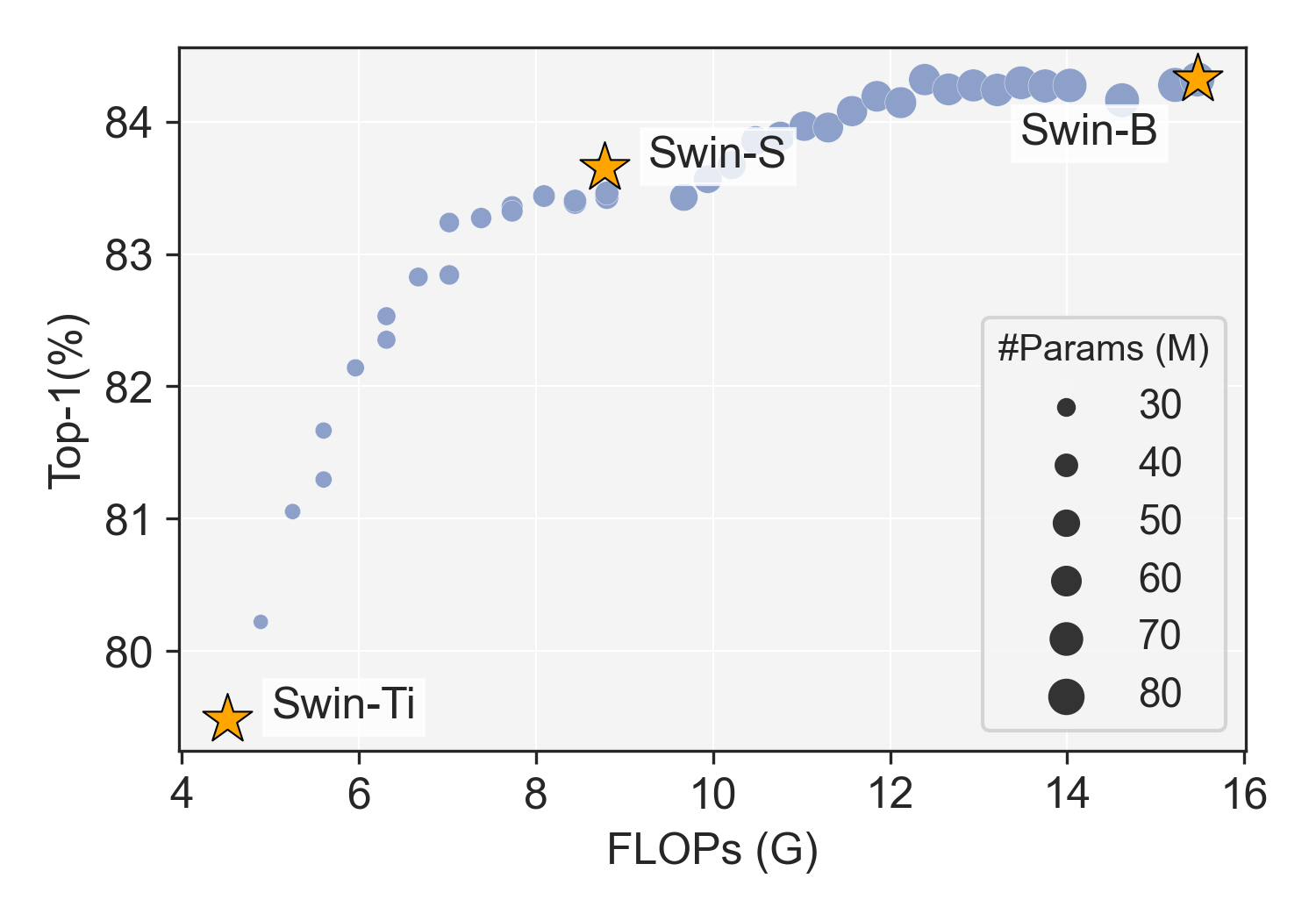

With only a few epochs of training, SN-Net effectively interpolates between the performance of anchors of varying scales. At runtime, SN-Net can instantly adapt to dynamic resource constraints by switching the stitching positions. Extensive experiments on ImageNet classification demonstrate that SN-Net can obtain on-par or even better performance than many individually trained networks while supporting diverse deployment scenarios. For example, by stitching Swin Transformers, we challenge hundreds of models in the Timm model zoo with a single network. We believe this new elastic model framework can serve as a strong baseline for further research in wider communities.
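Switching the stitching position at runtime amounts to a table lookup. The sketch below is illustrative only: `REL_BLOCK_COST`, `build_table` and `pick_stitch` are hypothetical, the relative per-block costs 1:4:16 are an assumption based on linear-layer cost growing roughly with the square of the embedding width (192/384/768 for DeiT-Ti/S/B), and "pick the most expensive stitch that fits the budget" is a proxy that assumes accuracy grows with cost. A real deployment would look up measured accuracy and latency instead.

```python
# Hypothetical relative cost of one block per anchor (~width^2 scaling, illustrative).
REL_BLOCK_COST = {"DeiT-Ti": 1.0, "DeiT-S": 4.0, "DeiT-B": 16.0}
DEPTH = 12  # DeiT-Ti/S/B each have 12 transformer blocks

def build_table(anchors, depth):
    # Each row: (front anchor, back anchor, blocks taken from front, relative cost).
    table = [(a, a, depth, depth * REL_BLOCK_COST[a]) for a in anchors]
    for front, back in zip(anchors, anchors[1:]):  # nearest pairs only
        for pos in range(1, depth):
            cost = pos * REL_BLOCK_COST[front] + (depth - pos) * REL_BLOCK_COST[back]
            table.append((front, back, pos, cost))
    return table

def pick_stitch(table, budget):
    # Proxy rule (assumption): the most expensive configuration within budget.
    feasible = [row for row in table if row[3] <= budget]
    if not feasible:
        raise ValueError("no stitch fits the budget")
    return max(feasible, key=lambda row: row[3])

table = build_table(["DeiT-Ti", "DeiT-S", "DeiT-B"], DEPTH)
print(pick_stitch(table, 30.0))  # ('DeiT-Ti', 'DeiT-S', 6, 30.0)
```

Since every configuration shares the same weights, changing the budget only changes which row is selected; no retraining or reloading of a different model is involved.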

Illustration of the proposed Stitchable Neural Network, where three pretrained DeiT variants are connected with simple stitching layers (1x1 convolutions). The same stitching layer is shared among neighboring blocks (e.g., every 2 blocks in this example) between two models. Apart from the basic anchor models, we obtain many sub-networks (stitches) by stitching the pairs of anchors that are nearest in complexity, e.g., DeiT-Ti and DeiT-S (the blue line) and DeiT-S and DeiT-B (the green line).
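The stitch space described in the caption can be enumerated in a few lines. This is a sketch under stated assumptions: `Stitch` and `enumerate_stitches` are hypothetical names, the smaller anchor is assumed to supply the early blocks, and the rule `(pos - 1) // share` is one assumed way of letting neighboring stitching positions reuse a single stitching layer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stitch:
    front: str     # anchor that runs the first `position` blocks
    back: str      # anchor that runs the remaining blocks
    position: int  # number of blocks taken from `front`
    layer_id: int  # index of the shared stitching layer (-1: plain anchor)

def enumerate_stitches(anchors, depth, share=2):
    # `anchors` must be sorted by complexity; only nearest pairs are stitched.
    configs = [Stitch(a, a, depth, -1) for a in anchors]
    for front, back in zip(anchors, anchors[1:]):
        for pos in range(1, depth):
            # Assumed sharing rule: positions 1-2 use layer 0, 3-4 layer 1, ...
            configs.append(Stitch(front, back, pos, (pos - 1) // share))
    return configs

stitches = enumerate_stitches(["DeiT-Ti", "DeiT-S", "DeiT-B"], depth=12, share=2)
layers_per_pair = len({s.layer_id for s in stitches
                       if (s.front, s.back) == ("DeiT-Ti", "DeiT-S")})
print(len(stitches), layers_per_pair)  # 25 6
```

Under these assumptions, three anchors with 12 blocks each yield 3 anchor networks plus 2 pairs x 11 positions = 25 configurations, while each pair needs only 6 stitching layers instead of 11 thanks to sharing.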

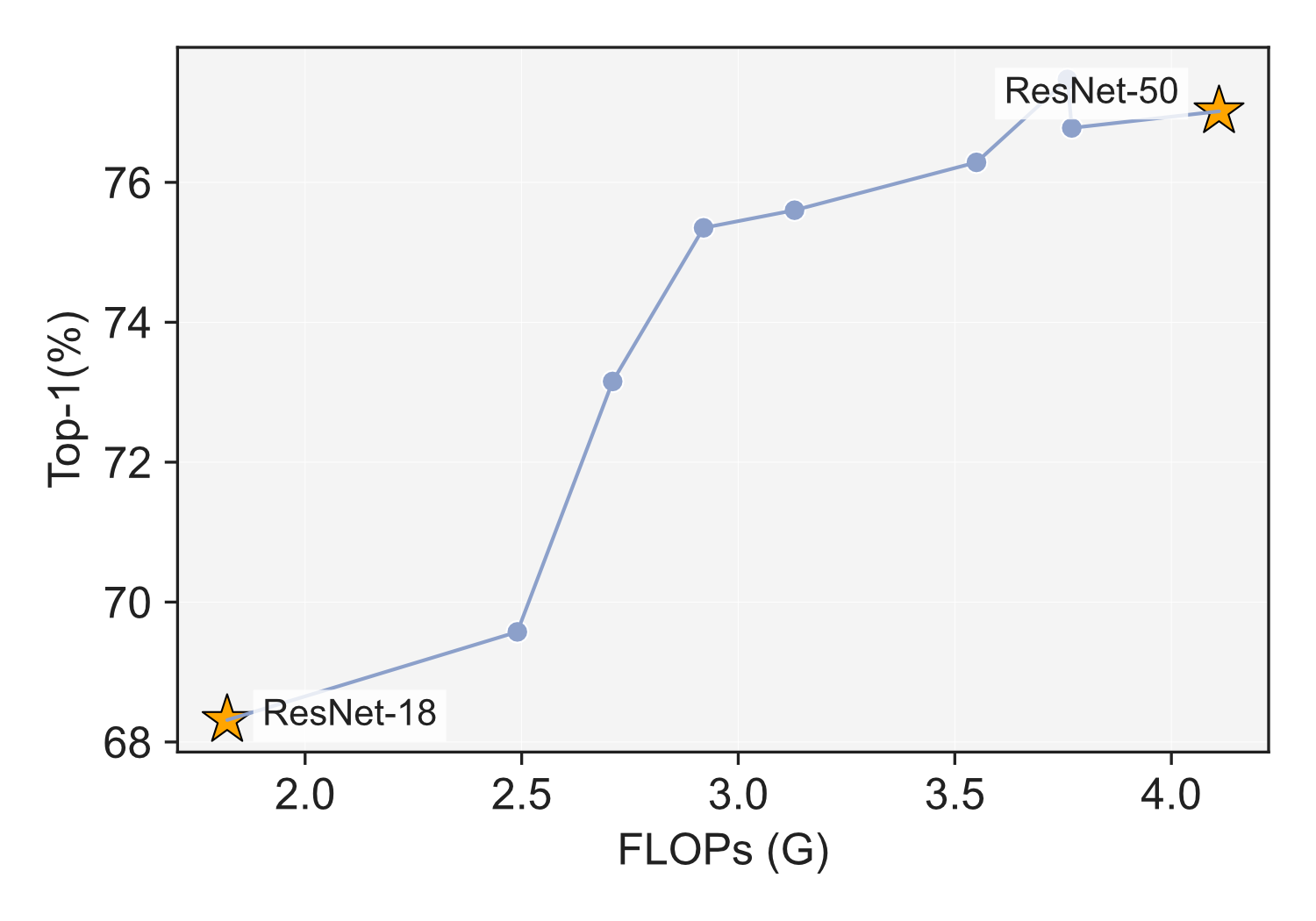

Assembling pretrained DeiT-Ti/S/B on ImageNet-1K with 50 epochs of training.

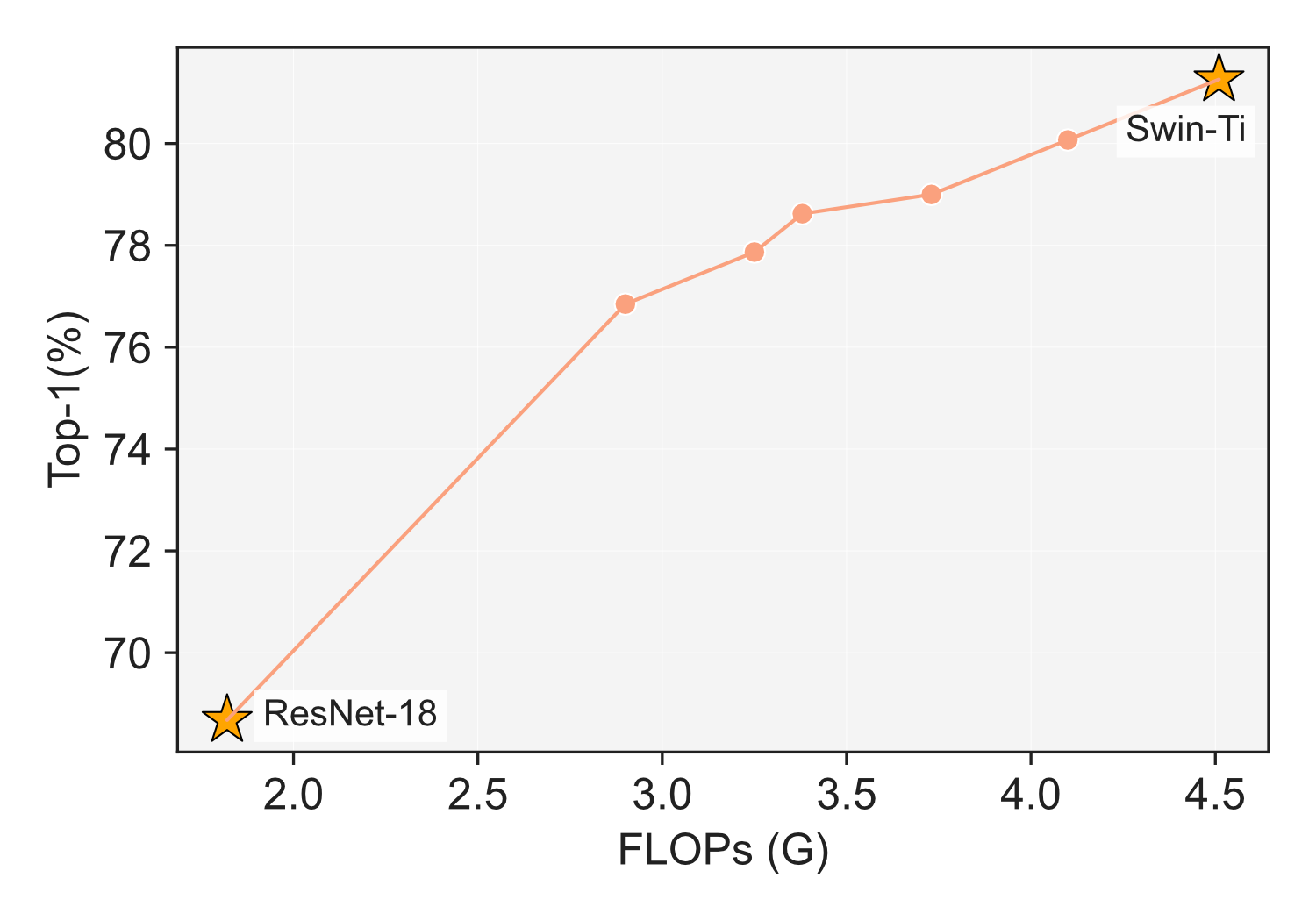

Assembling pretrained Swin-Ti/S/B on ImageNet-1K with 50 epochs of training.

If you like the project, please show your support by leaving a star 🌟!

@inproceedings{pan2023snnet,
  author    = {Pan, Zizheng and Cai, Jianfei and Zhuang, Bohan},
  title     = {Stitchable Neural Networks},
  booktitle = {CVPR},
  year      = {2023},
}